J.D. Vance is the next Republican candidate for Senator from Ohio.

The same day, a SCOTUS decision to overturn Roe v. Wade was leaked.

It’s been more than a year since the insurrection at the Capitol.

It’s been 5 1/2 years since we elected Trump.

42 years since we elected Reagan.

50 years since we elected Nixon.

The GOP is a corrupt organization with people like Vance who don’t believe anything they say. Today’s Republican party, which has been building to this point for over 50 years, only cares about three things:

Power.

Greed.

Ego.

The visible individuals who lead and control the party use doublespeak, lies, and prevarication to promote…

Fascism.

White supremacy.

Extractive hyper-capitalism.

Toxic masculinity.

Anti-Christian Christianity.

They continue to build on decades of amplification and acceleration of…

Concentration of wealth among a few and extraction of wealth from everyone else, especially the most vulnerable.

Promotion of violence, locally through guns and militaristic agencies and globally through defense spending and war.

Disenfranchisement, oppression, exploitation, and elimination of Black people, as well as Indigenous and other people of color.

Harassment of and assaults on women and LGBTQI+ people, their bodies, their voices, and their agency.

Erosion and devaluation of, and loss of trust in, democratic institutions, values, rights, and freedoms.

Deconstruction of regulation and safety nets.

Co-opting and subjugation of the media.

Destruction of the environment, ecosystems, and species.

Many of us push back against this. The Republicans pushing this agenda are a minority of the US population. They do not represent our will and desires. We need to do more.

Wake up.

Think globally, act locally.

Protest and activate.

Nurture and protect the vulnerable.

Tax the rich.

Prosecute the corrupt.

Get out the vote.

Throw the bums out.

Get elected to local and state offices.

Change legislative and bureaucratic processes.

Pass meaningful legislation.

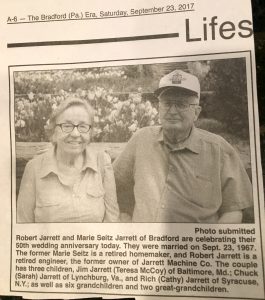

Metaphorically, we have mutated into a virulent strain of infection in the world. We need powerful antibiotics and more robust immune systems. If we don’t slow and reverse this progression, we’ve nowhere else to go. The host, our Earth, our species and many others, will die out. I don’t want to witness and experience this in my lifetime. Or my children’s or grandchildren’s.